
Amazon now typically asks interviewees to code in an online document file. Now that you know what questions to expect, let's focus on how to prepare.

Below is our four-step prep plan for Amazon data scientist candidates. If you're preparing for more companies than just Amazon, check our general data science interview preparation guide. Most candidates fail to do this. But before investing tens of hours preparing for an interview at Amazon, you should take some time to make sure it's actually the right company for you.

, which, although it's built around software development, should give you an idea of what they're looking for.

Note that in the onsite rounds you'll likely have to code on a whiteboard without being able to execute it, so practice working through problems on paper. For machine learning and statistics questions, offers online courses built around statistical probability and other useful topics, some of which are free. Kaggle also offers free courses on introductory and intermediate machine learning, as well as data cleaning, data visualization, SQL, and others.

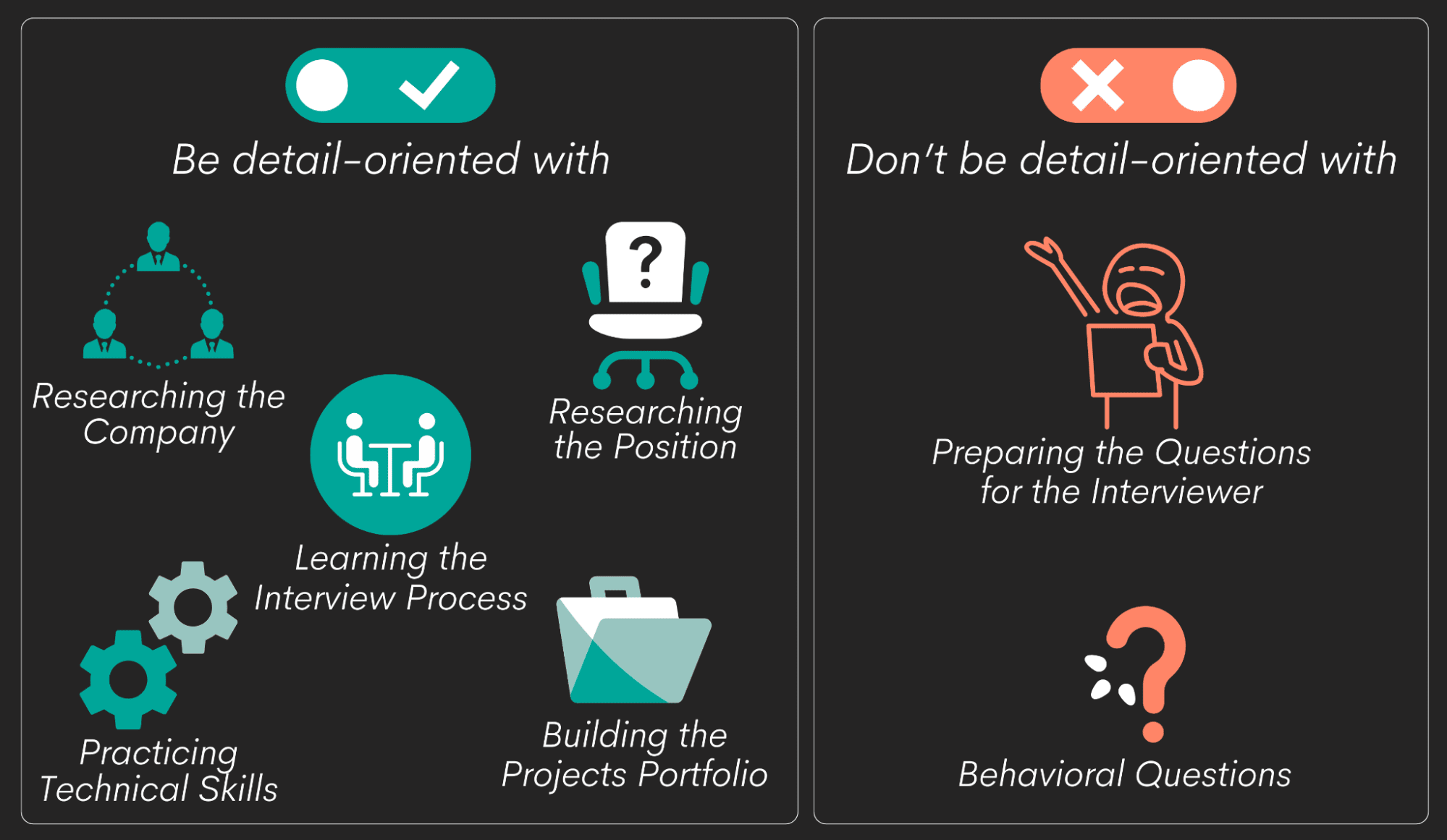

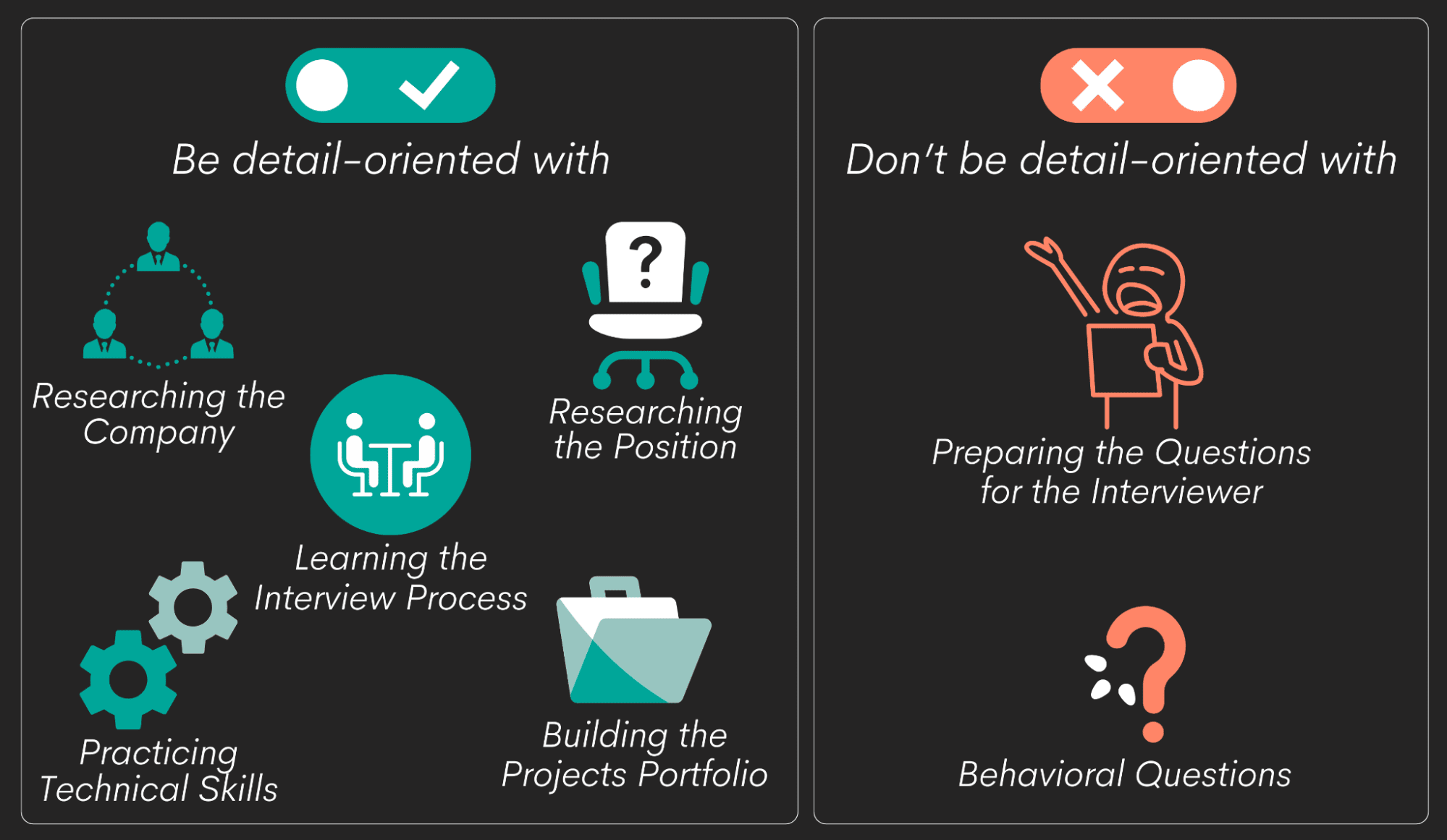

Key Insights Into Data Science Role-specific Questions

You can post your own questions and discuss topics likely to come up in your interview on Reddit's statistics and machine learning threads. For behavioral interview questions, we recommend learning our step-by-step method for answering behavioral questions. You can then use that method to practice answering the example questions provided in Section 3.3 above. Make sure you have at least one story or example for each of the principles, drawn from a variety of positions and projects. Finally, a great way to practice all of these different types of questions is to interview yourself out loud. This may sound strange, but it will significantly improve the way you communicate your answers during an interview.

One of the main challenges of data scientist interviews at Amazon is communicating your answers in a way that's easy to understand. As a result, we strongly recommend practicing with a peer interviewing you.

However, peers are unlikely to have insider knowledge of interviews at your target company. For this reason, many candidates skip peer mock interviews and go straight to mock interviews with an expert.

That's an ROI of 100x!

Typically, data science focuses on mathematics, computer science and domain expertise. While I will briefly cover some computer science fundamentals, the bulk of this blog will mainly cover the mathematical basics one might need to brush up on (or even take an entire course on).

While I understand most of you reading this are more math-heavy by nature, realize that the bulk of data science (dare I say 80%+) is collecting, cleaning and processing data into a useful form. As for programming languages, Python and R are the most popular ones in the data science space. I have also come across C/C++, Java and Scala.

Exploring Machine Learning For Data Science Roles

It is common to see most data scientists fall into one of two camps: Mathematicians and Database Architects. If you are in the second one, this blog won't help you much (YOU ARE ALREADY AWESOME!).

The first step is data collection. This could mean gathering sensor data, scraping websites or carrying out surveys. After collecting the data, it needs to be transformed into a usable form (e.g. a key-value store in JSON Lines files). Once the data is collected and put in a usable format, it is essential to perform some data quality checks.
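As a rough illustration of the "usable form plus quality checks" step, here is a minimal standard-library sketch. It writes records to a JSON Lines file (one JSON object per line), reads them back, and counts missing values and exact duplicates. The field names `sensor_id` and `reading` and the helper names are hypothetical. Real pipelines usually check much more, such as value ranges, types and freshness.

```python
import json
import math
import os
import tempfile


def to_jsonl(records, path):
    """Write an iterable of dicts as JSON Lines: one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec) + "\n")


def read_jsonl(path):
    """Read a JSON Lines file back into a list of dicts, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def quality_report(records, fields):
    """Count missing (None/NaN) values per field and exact duplicate records."""
    missing = {field: 0 for field in fields}
    seen, duplicates = set(), 0
    for rec in records:
        for field in fields:
            value = rec.get(field)
            if value is None or (isinstance(value, float) and math.isnan(value)):
                missing[field] += 1
        key = json.dumps(rec, sort_keys=True)  # canonical form for duplicate detection
        if key in seen:
            duplicates += 1
        seen.add(key)
    return {"rows": len(records), "missing": missing, "duplicates": duplicates}


if __name__ == "__main__":
    # Hypothetical sensor readings, including one null and one duplicate row.
    rows = [
        {"sensor_id": "a", "reading": 21.5},
        {"sensor_id": "b", "reading": None},
        {"sensor_id": "a", "reading": 21.5},
    ]
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "readings.jsonl")
        to_jsonl(rows, path)
        print(quality_report(read_jsonl(path), ["sensor_id", "reading"]))
```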

Visualizing Data For Interview Success

One such check is the class distribution. In fraud problems, for example, heavy class imbalance is very common (e.g. only 2% of the dataset is actual fraud). Knowing this is important for choosing the appropriate approaches to feature engineering, modelling and model evaluation. To learn more, check my blog on Fraud Detection Under Extreme Class Imbalance.
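A quick way to spot imbalance is to compute each class's share of the labels. The toy labels below are hypothetical, with 1 meaning fraud and 2 fraud cases out of 100. The sketch also shows why plain accuracy misleads here: a "model" that always predicts "not fraud" already scores 98%.

```python
from collections import Counter


def class_balance(labels):
    """Return each class's share of the dataset, most frequent class first."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {cls: n / total for cls, n in counts.most_common()}


labels = [1] * 2 + [0] * 98  # toy data: 2 fraud cases out of 100
shares = class_balance(labels)
print(shares)  # {0: 0.98, 1: 0.02}

# Always predicting "not fraud" is 98% accurate yet catches zero fraud cases.
always_zero_accuracy = sum(1 for y in labels if y == 0) / len(labels)
print(always_zero_accuracy)  # 0.98
```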

A typical univariate analysis of choice is the histogram. In bivariate analysis, each feature is compared to the other features in the dataset. This would include the correlation matrix, the covariance matrix or my personal favorite, the scatter matrix. Scatter matrices allow us to find hidden patterns such as:
- features that should be engineered together
- features that may need to be removed to avoid multicollinearity

Multicollinearity is a real issue for many models, such as linear regression, and hence needs to be handled appropriately.

Think of internet usage data. YouTube users may consume as much as gigabytes, while Facebook Messenger users use only a couple of megabytes. Features spanning several orders of magnitude like this usually need to be rescaled or log-transformed before modelling.
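One common fix for heavy-tailed, non-negative features like data usage is a log transform. The sketch below applies `log10(1 + x)` to hypothetical monthly usage figures in megabytes. The raw spread of roughly 24,000x shrinks to about 10x while the ordering of users is preserved. The `1 + x` keeps zero usage defined.

```python
import math

# Hypothetical monthly data usage in megabytes.
usage_mb = {"messenger_user": 2.0, "casual_user": 350.0, "youtube_user": 48_000.0}


def log_scale(values):
    """Compress heavy-tailed, non-negative values with log10(1 + x)."""
    return {k: math.log10(1 + v) for k, v in values.items()}


scaled = log_scale(usage_mb)
for user, value in scaled.items():
    print(f"{user}: {usage_mb[user]:>9.1f} MB -> {value:.2f}")
```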

Another problem is the use of categorical values. While categorical values are common in the data science world, realize that computers can only understand numbers, so these values have to be encoded numerically first.
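One-hot encoding is one standard way to turn categories into numbers. Each category becomes its own 0/1 column. Below is a minimal hand-rolled sketch using hypothetical platform values. In practice, `pandas.get_dummies` or scikit-learn's `OneHotEncoder` do this for you.

```python
def one_hot(values):
    """Map each categorical value to a 0/1 indicator vector (one column per category)."""
    categories = sorted(set(values))
    index = {cat: i for i, cat in enumerate(categories)}
    rows = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1
        rows.append(row)
    return categories, rows


cats, encoded = one_hot(["ios", "android", "web", "ios"])
print(cats)     # ['android', 'ios', 'web']
print(encoded)  # [[0, 1, 0], [1, 0, 0], [0, 0, 1], [0, 1, 0]]
```

Note that a feature with thousands of categories becomes thousands of mostly-zero columns. This is the kind of sparse, high-dimensional data that motivates dimensionality reduction.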

Key Skills For Data Science Roles

At times, having too many sparse dimensions will hamper the performance of the model. An algorithm commonly used for dimensionality reduction is Principal Component Analysis, or PCA.
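To show what PCA computes, here is a minimal standard-library sketch of the first principal component only. It centers the data, builds the sample covariance matrix, and finds that matrix's top eigenvector by power iteration. The iteration count and seed are arbitrary choices. Real work would use a library routine such as scikit-learn's `sklearn.decomposition.PCA`, which computes all components via SVD.

```python
import math
import random


def pca_first_component(rows, iterations=200, seed=0):
    """Return (unit component vector, projections) for the top principal component.

    rows: list of equal-length numeric lists (samples x features).
    Assumes the data is not constant, so the covariance matrix is non-zero.
    """
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)] for i in range(d)]
    # Power iteration: repeatedly multiplying by cov converges to the
    # eigenvector with the largest eigenvalue (the direction of most variance).
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in range(d)]
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    projections = [sum(c[j] * v[j] for j in range(d)) for c in centered]
    return v, projections


# Toy 2-D data lying close to the line y = x: one component captures almost everything.
rows = [[1.0, 1.1], [2.0, 1.9], [3.0, 3.2], [4.0, 3.9], [5.0, 5.0]]
component, scores = pca_first_component(rows)
print([round(c, 3) for c in component])
print([round(s, 3) for s in scores])
```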

Feature selection methods fall into a few common categories, and the main ones and their subcategories are explained in this section. Filter methods are generally used as a preprocessing step. The selection of features is independent of any machine learning algorithm. Instead, features are selected on the basis of their scores in various statistical tests for their correlation with the outcome variable.

Common methods under this category are Pearson's Correlation, Linear Discriminant Analysis, ANOVA and Chi-Square. In wrapper methods, we try using a subset of features and train a model with them. Based on the inferences we draw from the previous model, we decide to add or remove features from the subset.
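Here is a minimal wrapper-method sketch: greedy forward selection. At each round it fits an ordinary least-squares model with every candidate feature added to the current subset. It keeps the candidate that raises R² the most, and stops when the gain falls below `min_gain`. Scoring on in-sample R² with a minimum-gain stop is a simplifying assumption. In practice you would score on a validation set or with cross-validation. Feature names and data are hypothetical.

```python
def fit_ols(X, y):
    """Least-squares coefficients (intercept first) via the normal equations."""
    A = [[1.0] + list(row) for row in X]  # prepend an intercept column
    p = len(A[0])
    # Augmented system [A^T A | A^T y].
    M = [
        [sum(a[i] * a[j] for a in A) for j in range(p)] + [sum(a[i] * t for a, t in zip(A, y))]
        for i in range(p)
    ]
    # Gauss-Jordan elimination with partial pivoting (assumes a non-singular system).
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(p):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [m - factor * c for m, c in zip(M[r], M[col])]
    return [M[i][p] / M[i][i] for i in range(p)]


def r_squared(X, y):
    beta = fit_ols(X, y)
    preds = [beta[0] + sum(b * x for b, x in zip(beta[1:], row)) for row in X]
    mean_y = sum(y) / len(y)
    ss_res = sum((t - q) ** 2 for t, q in zip(y, preds))
    ss_tot = sum((t - mean_y) ** 2 for t in y)
    return 1 - ss_res / ss_tot


def forward_selection(features, y, min_gain=0.01):
    """Greedily add the feature that most improves R^2 until the gain is too small."""
    selected, best_score, remaining = [], 0.0, list(features)
    while remaining:
        scores = {}
        for name in remaining:
            cols = selected + [name]
            X = [[features[c][i] for c in cols] for i in range(len(y))]
            scores[name] = r_squared(X, y)
        candidate = max(scores, key=scores.get)
        if scores[candidate] - best_score < min_gain:
            break
        selected.append(candidate)
        remaining.remove(candidate)
        best_score = scores[candidate]
    return selected, best_score


# Toy data: y is roughly 2*x1 + 3*x2; x3 is irrelevant.
features = {
    "x1": [1, 2, 3, 4, 5, 6, 7, 8],
    "x2": [2, 1, 4, 3, 6, 5, 8, 7],
    "x3": [5, 3, 8, 1, 9, 2, 7, 4],
}
y = [8.1, 6.9, 18.05, 17.0, 27.95, 27.1, 37.9, 37.0]
print(forward_selection(features, y))
```

Backward elimination is the mirror image: start with all features and drop the least useful one each round.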

Coding Interview Preparation

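These methods are usually computationally very expensive. Common methods under this category are Forward Selection, Backward Elimination and Recursive Feature Elimination. Embedded methods combine the qualities of filter and wrapper methods. They are implemented by algorithms that have their own built-in feature selection methods. LASSO and RIDGE are common ones. The regularizations are given in the equations below for reference:

Lasso: $\min_{\beta} \sum_{i=1}^{n} (y_i - x_i^\top \beta)^2 + \lambda \sum_{j=1}^{p} |\beta_j|$

Ridge: $\min_{\beta} \sum_{i=1}^{n} (y_i - x_i^\top \beta)^2 + \lambda \sum_{j=1}^{p} \beta_j^2$

Here $\lambda \ge 0$ controls the strength of the penalty. Strictly speaking, only LASSO's L1 penalty can shrink coefficients exactly to zero and thereby drop features. RIDGE's L2 penalty shrinks coefficients toward zero without eliminating them. That being said, it is important to understand the mechanics behind LASSO and RIDGE for interviews.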
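To see why LASSO selects features, here is a minimal coordinate-descent sketch. The objective is the sum of squared residuals plus λ times the sum of absolute coefficients. Each coefficient update is a soft-threshold step, which sets a coefficient exactly to zero when its correlation with the partial residual falls below λ/2. The sketch centers X and y so the intercept is recovered afterwards rather than penalized. The fixed iteration count, the data and the λ values are illustrative assumptions. Libraries such as scikit-learn's `Lasso` use a differently scaled objective, so their `alpha` is not the same number as λ here.

```python
def soft_threshold(rho, t):
    """sign(rho) * max(|rho| - t, 0): the proximal step for the L1 penalty."""
    if rho > t:
        return rho - t
    if rho < -t:
        return rho + t
    return 0.0


def lasso(X, y, lam, iterations=500):
    """Coordinate descent for sum_i (y_i - x_i . beta)^2 + lam * sum_j |beta_j|."""
    n, p = len(X), len(X[0])
    x_mean = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    Xc = [[row[j] - x_mean[j] for j in range(p)] for row in X]
    yc = [v - y_mean for v in y]
    z = [sum(row[j] ** 2 for row in Xc) for j in range(p)]  # sum of squares per column
    beta = [0.0] * p
    for _ in range(iterations):
        for j in range(p):
            # Correlation of feature j with the residual that ignores feature j.
            rho = sum(
                Xc[i][j] * (yc[i] - sum(Xc[i][k] * beta[k] for k in range(p) if k != j))
                for i in range(n)
            )
            beta[j] = soft_threshold(rho, lam / 2) / z[j]
    intercept = y_mean - sum(b * m for b, m in zip(beta, x_mean))
    return intercept, beta


# Toy data: y = 3*x1 + 0.05*x2, so x2 has only a tiny true effect.
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [1, -1, -1, 1, 1, -1, -1, 1]
X = [[a, b] for a, b in zip(x1, x2)]
y = [3 * a + 0.05 * b for a, b in zip(x1, x2)]

_, beta_ols = lasso(X, y, lam=0.0)    # no penalty: plain least squares
_, beta_lasso = lasso(X, y, lam=2.0)  # penalty large enough to drop x2
print(beta_ols, beta_lasso)
```

With λ = 0 the second coefficient comes out at about 0.05. With λ = 2 it is exactly 0.0, and the first coefficient is shrunk slightly below 3.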

Supervised Learning is when the labels are available. Unsupervised Learning is when the labels are unavailable. Get it? SUPERVISE the labels! Pun intended. That being said, never mix the two up!!! This mistake is enough for the interviewer to cancel the interview. Another noob mistake people make is not normalizing the features before running the model.
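A minimal sketch of one common normalization, z-scoring, follows. Each feature has its mean subtracted and is divided by its sample standard deviation, so income in the tens of thousands and age in the tens end up on comparable scales. The columns are hypothetical. Scale matters most for distance-based models, gradient-based training and regularized models like LASSO and RIDGE, whose penalties depend on coefficient size. In a real pipeline, compute the mean and standard deviation on the training set only and reuse them for test data.

```python
from statistics import mean, stdev


def standardize(column):
    """Z-score a feature: subtract its mean, divide by its sample standard deviation.

    Assumes the column is not constant (stdev > 0).
    """
    mu, sigma = mean(column), stdev(column)
    return [(x - mu) / sigma for x in column]


income = [30_000, 45_000, 60_000, 75_000, 90_000]
age = [22, 35, 41, 29, 53]
scaled_income = standardize(income)
scaled_age = standardize(age)
print([round(v, 3) for v in scaled_income])
print([round(v, 3) for v in scaled_age])
```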

Rule of thumb: Linear and Logistic Regression are the most basic and commonly used machine learning algorithms out there, so fit one of them as a baseline before doing any more complex analysis. One common interview blooper people make is starting their analysis with a more complex model like a neural network. No doubt, neural networks can be very accurate, but benchmarks are important.
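Here is a minimal sketch of the benchmark-first habit. It compares a trivial majority-class baseline with a logistic regression trained by plain batch gradient descent on the log loss, both scored on a small held-out set. The toy data, learning rate and epoch count are arbitrary assumptions. A more complex model is only worth its cost if it clearly beats numbers like these.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def majority_baseline(y_train):
    """The simplest benchmark: always predict the most frequent training label."""
    majority = max(set(y_train), key=y_train.count)
    return lambda x: majority


def train_logistic(X, y, lr=0.1, epochs=2000):
    """Logistic regression fitted with plain batch gradient descent on the log loss."""
    n, p = len(X), len(X[0])
    w, b = [0.0] * p, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * p, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            grad_w = [g + err * xj for g, xj in zip(grad_w, xi)]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    # Predict class 1 when the estimated probability exceeds 0.5 (logit > 0).
    return lambda x: int(b + sum(wj * xj for wj, xj in zip(w, x)) > 0)


def accuracy(model, X, y):
    return sum(model(xi) == yi for xi, yi in zip(X, y)) / len(y)


X_train = [[-2.0], [-1.5], [-1.0], [-0.5], [0.5], [1.0], [1.5], [2.0]]
y_train = [0, 0, 0, 0, 1, 1, 1, 1]
X_test = [[-1.75], [-0.75], [0.75], [1.25]]
y_test = [0, 0, 1, 1]

baseline = majority_baseline(y_train)
logit = train_logistic(X_train, y_train)
print("baseline accuracy:", accuracy(baseline, X_test, y_test))
print("logistic accuracy:", accuracy(logit, X_test, y_test))
```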
